The sheer abundance of AI system types can be bewildering: conversational, adaptive, generative, agentic. Each category highlights a different way machines can interact with information, users, and environments. And as AI development accelerates, we can expect even more classifications to appear.

What makes the picture even more complex is that these high-level categories aren’t standalone. Every type of AI branches further into subtypes, specialized for particular use cases. This layered structure makes AI at once fascinating and challenging to navigate: a single system may combine traits from several categories, blurring the lines between them.

Today, let’s take a closer look at agentic AI — a class of systems designed to operate with the highest possible level of autonomy and make decisions with minimal human intervention. Let’s explore its major types, implementation challenges, and growth hurdles in this blog post.

Key Highlights

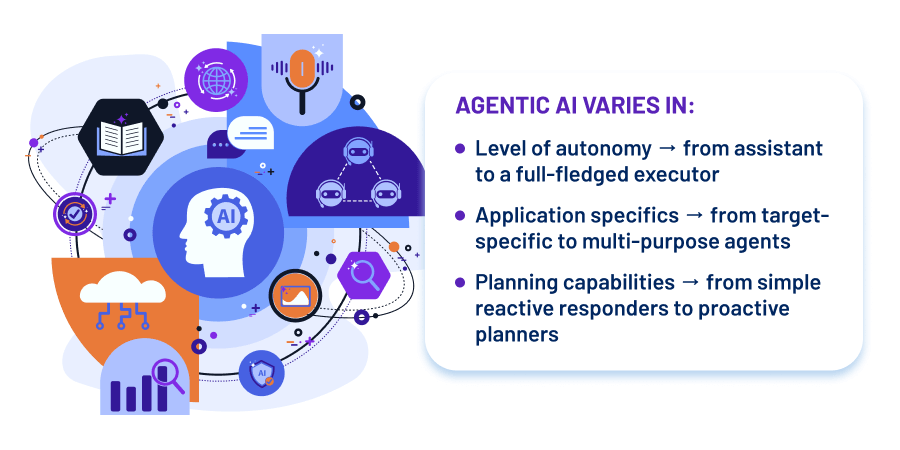

- Agentic AI stands out for autonomy: from human-assisted suggestions to fully independent decision-making

- Agentic AI systems vary in the level of autonomy, application specifics, and planning abilities

- Multi-agent ecosystems open up new possibilities but struggle with context management and synchronization between agents

- Scaling agentic AI means balancing engineering challenges with legal, ethical, and business constraints

The Essence of Agentic AI and Its Variations Explained

Sometimes, agentic AI is still perceived as an ordinary chatbot. And indeed, the difference between these two is not always obvious: at first glance, both interact with the user in a conversational format. However, the key distinction is that agentic AI is not limited to just answering queries; it can also take autonomous actions to achieve a result.

While a traditional chatbot is reactive and simply provides information or advice within its knowledge base, an agent can actively interact with external systems. It can analyze a task, build a sequence of steps, and execute it: request data from third-party services, launch a file, adjust program settings, or even deploy an application to the cloud. In other words, it gains an interface to the real world.

This boundary is somewhat fluid: modern models like ChatGPT, when connected to tools or the Internet, already move beyond being “just a chatbot” and start functioning as agents. A good example is the integration of AI into development environments.

Initially, such systems only suggested code snippets, but over time, they evolved to run programs, test results, and even deploy projects to servers. Thus, agentic AI represents a shift from an ordinary “responder” to “executor,” with the focus on autonomy and the ability to carry a task through to concrete action.

These systems also differ along several dimensions, which we outline below.

The Level of Autonomy

One of the most evident ways to classify AI agents is by their level of autonomy. On this scale, we can distinguish between systems that merely assist humans and those that act entirely on their own.

At the lowest level are agents that only suggest possible actions, while the final decision always rests with a human. This approach is often used in high-risk domains. For instance, in fintech and banking, an agent may calculate optimal steps or propose an investment strategy, but a human controller takes responsibility for confirming or rejecting the suggestion. This setup reduces the chance of errors and keeps processes under control.

Further along the scale are semi-autonomous agents, which can carry out tasks with minimal supervision but still require periodic approval or monitoring.

At the far end of the spectrum are highly autonomous agents entrusted with making and executing decisions independently, guided by defined goals and constraints. In finance, these can be portfolio management systems that have the ability to automatically invest funds. A human simply sets the parameters, say, “We have one million dollars, distribute the capital most effectively,” and the agent independently determines the investment steps.

Of course, even fully autonomous systems include layers of risk management and safeguards. The higher the autonomy, the greater the potential risks are. Yet, these systems clearly illustrate how AI is transforming from a supporting tool into an active decision-maker within complex processes.
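To make the autonomy scale more tangible, here is a minimal sketch of how an action could be routed depending on the agent's autonomy level. The names (`Autonomy`, `Action`, `decide`) and the threshold values are illustrative assumptions, not part of any specific framework.

```python
from dataclasses import dataclass
from enum import Enum


class Autonomy(Enum):
    SUGGEST_ONLY = 1      # agent proposes, a human decides
    SEMI_AUTONOMOUS = 2   # agent acts, but large actions need approval
    AUTONOMOUS = 3        # agent acts within predefined constraints


@dataclass
class Action:
    description: str
    amount: float  # monetary value at stake, in dollars


def decide(action: Action, level: Autonomy,
           approval_threshold: float = 10_000.0,
           hard_limit: float = 1_000_000.0) -> str:
    """Return 'execute', 'ask_human', or 'reject' for a proposed action."""
    # Safeguards apply at every level, including full autonomy.
    if action.amount > hard_limit:
        return "reject"
    if level is Autonomy.SUGGEST_ONLY:
        return "ask_human"
    if level is Autonomy.SEMI_AUTONOMOUS and action.amount > approval_threshold:
        return "ask_human"
    return "execute"


# Hypothetical example: the same trade handled at each autonomy level.
trade = Action("buy index fund shares", amount=25_000.0)
for level in Autonomy:
    print(level.name, "->", decide(trade, level))
```

Note that the hard limit is checked first, so even the fully autonomous level stays inside the constraints a human has set.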

Application Specifics

Another way to classify AI agents is by the scope of tasks they can handle. Here we can distinguish between narrowly specialized systems and broad, general-purpose assistants.

Specialized agents operate within a specific domain. Continuing the example of the banking sphere, an agent might help a client manage personal finances: distribute currency risks across accounts, optimize savings, or recommend a profitable conversion. Such systems solve clearly defined problems and function within a limited set of capabilities.

Moving further, there are multi-purpose agents that take a broader view of the user’s goals. In the same banking context, such an agent could help a client save for a down payment, build a mortgage repayment strategy, suggest investment tools, and, at the same time, connect them with additional banking products.

Finally, there exist general-purpose agents — digital assistants capable of fulfilling almost any whim (within reasonable limits, of course). They can arrange a vacation, find and book the cheapest and most convenient tickets and hotels, design an itinerary, and, in another context, help a user choose an appliance, compare offers, and even finalize the purchase. These systems act as personal concierges, ready to assist with day-to-day tasks.

For now, general-purpose agents are still in their early stages and are being adopted quite reluctantly, which is totally understandable. The market is not fully ready to delegate critical decisions to them, and technical limitations remain. Yet, the trend is clear: as external integrations and services (from delivery to ride-hailing) evolve, these agents will become more practical, reliable, and widely adopted.

Planning Abilities

We can also classify AI agents by their ability to plan actions. At the simplest level, there are reactive agents, which operate on a direct question–response model. The logic is simple: an event occurs, and the agent reacts immediately. For example, a user submits a request, and the system processes it without considering broader context or future needs.

On the other hand, there are planning agents that can anticipate requirements and act proactively. Imagine a digital assistant that knows its owner wears suits and needs them dry-cleaned regularly. Instead of waiting for a request, it can recognize when it’s time for cleaning, schedule a courier, and arrange the return, all on a regular basis and without any user input.

This planning ability is equally valuable in the business world. An agent might function as an “office concierge,” automatically ordering supplies, arranging cleaning, monitoring equipment status, or issuing building passes. In doing so, it removes routine overhead and ensures operations run smoothly, without the need to take care of minor but important things.
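The contrast between the two styles can be sketched in a few lines. This is a simplified illustration under our own assumptions: `reactive_agent`, `RecurringNeed`, and `PlanningAgent` are hypothetical names, and a real planning agent would rely on an LLM and external services rather than a fixed interval.

```python
from dataclasses import dataclass
from datetime import date, timedelta


def reactive_agent(request: str) -> str:
    """Reactive: answers the request it receives and nothing more."""
    return f"Handled: {request}"


@dataclass
class RecurringNeed:
    name: str
    interval_days: int
    last_done: date


class PlanningAgent:
    """Planning: tracks known recurring needs and acts before being asked."""

    def __init__(self, needs: list[RecurringNeed]):
        self.needs = needs

    def due_tasks(self, today: date) -> list[str]:
        tasks = []
        for need in self.needs:
            if today - need.last_done >= timedelta(days=need.interval_days):
                tasks.append(f"Schedule courier for {need.name}")
                need.last_done = today  # record the action in the agent's memory
        return tasks


agent = PlanningAgent([RecurringNeed("suit dry-cleaning", 14, date(2025, 1, 1))])
print(reactive_agent("book a courier"))
print(agent.due_tasks(date(2025, 1, 10)))  # not due yet
print(agent.due_tasks(date(2025, 1, 15)))  # due, scheduled without a request
```

The reactive function has no state at all, while the planning agent needs memory of what it has already done. That memory is what lets it act without a prompt.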

Highly autonomous or semi-autonomous agentic AI systems are best for real-time decision-making. These agents can act independently or with minimal supervision, analyze incoming data, and execute tasks on the fly, making them ideal for scenarios like financial trading, fraud detection, or operational monitoring where immediate, informed decisions are critical.

Agentic AI Combinations, Reasoning Agents, and Multi-Agent Systems

Of course, the agent types described in the previous sections rarely exist in “pure” form, such as purely reactive or purely task-specific agents; they are easily combined with each other. The same system can simultaneously respond to current events, maintain long-term goals, and plan steps years in advance. Within this context, we can highlight several directions.

Combination of Agents

Combined agents integrate different approaches: they can respond to individual requests while also developing long-term strategies. For example, a financial agent within a banking app might suggest currency operations in real time while simultaneously calculating a 10-year savings plan. Such systems require long-term memory, an action log, and connections to external services to manage user assets in the most effective manner.

Reasoning Agents

The term often refers to a complex solution centered around a reasoning LLM: a model capable not just of generating probable answers but of reasoning actively. It can conduct an internal “dialogue with itself,” ask clarifying questions, and form strategies.

For instance, when tasked with helping a client save for a house in five years, a reasoning agent will evaluate current assets, market forecasts, available banking products, and even ask the client for additional information if needed. These agents rely on a combination of short-term and long-term memory, system prompts, and connectors to external services.

Reactive agents respond immediately to inputs or events without considering broader context or future consequences. Reasoning agents, on the other hand, leverage machine learning models to analyze data, anticipate outcomes, and plan multi-step actions proactively. They can weigh options, ask clarifying questions, and coordinate across tasks, making them suitable for complex workflows that require foresight and adaptability.

Multi-Agent Systems

While a single agent acts as an independent executor, multi-agent systems function like a team of specialists distributing roles and tasks. Let’s take a software development team consisting of an architect, backend and frontend developers, a tester, an analyst, and a project manager as an example.

Each agent is responsible for its area of expertise, whether a specific technology, domain, or architectural component. They interact through shared memory (for example, a code repository or task management system), exchange messages, and gradually assemble the complete solution. Although this architecture is still actively researched, it already demonstrates potential for automating complex projects.
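As a rough illustration of agents cooperating through shared memory, consider the sketch below. It is a toy model under our own assumptions: `SharedMemory`, `RoleAgent`, and `run_team` are hypothetical names, and each agent's "work" is reduced to writing a placeholder string instead of calling a model.

```python
from dataclasses import dataclass, field


@dataclass
class SharedMemory:
    """Stand-in for a code repository or task tracker shared by all agents."""
    artifacts: dict[str, str] = field(default_factory=dict)
    log: list[str] = field(default_factory=list)


@dataclass
class RoleAgent:
    role: str
    needs: list[str]   # artifacts this agent must read before it can work
    produces: str      # artifact this agent writes

    def can_run(self, memory: SharedMemory) -> bool:
        return (self.produces not in memory.artifacts
                and all(n in memory.artifacts for n in self.needs))

    def run(self, memory: SharedMemory) -> None:
        inputs = ", ".join(self.needs) or "the task brief"
        memory.artifacts[self.produces] = f"{self.produces} by {self.role}"
        memory.log.append(f"{self.role} used {inputs} -> {self.produces}")


def run_team(agents: list[RoleAgent], memory: SharedMemory) -> None:
    """Keep cycling until no agent can make further progress."""
    progressed = True
    while progressed:
        progressed = False
        for agent in agents:
            if agent.can_run(memory):
                agent.run(memory)
                progressed = True


# The list order is deliberately scrambled: dependencies decide who runs when.
team = [
    RoleAgent("tester", ["backend", "frontend"], "test_report"),
    RoleAgent("backend developer", ["architecture"], "backend"),
    RoleAgent("frontend developer", ["architecture"], "frontend"),
    RoleAgent("architect", [], "architecture"),
]
memory = SharedMemory()
run_team(team, memory)
print("\n".join(memory.log))
```

No agent calls another directly. Each one only checks whether the artifacts it depends on have appeared in shared memory, which is how the solution gets assembled step by step.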

Keeping Agents in Sync and Context Management as the Biggest Challenges for Multi-Agent Systems

In complex multi-agent systems, where each agent has a specific role and carries out particular tasks, the obvious question is how to ensure well-coordinated interaction between them. The risk of desynchronization arises when agents fail to understand each other or operate on incomplete data.

This issue is directly tied to context management. The knowledge that agents rely on needs to be accumulated, structured, and taken into account. At the same time, large language models work within a limited context window, and only the most relevant information should be placed there.

The effectiveness of a multi-agent system mostly depends on how well this window is filled. If irrelevant or noisy data makes its way in, the model’s output will be equally “noisy.” While context windows are gradually expanding, AI tools are also emerging to manage context more intelligently.

Within such systems, there is often a dedicated service layer of agents. For example, a coordinator agent, whose role is to gather the right context from multiple sources: code repositories, Jira tasks, Confluence documentation, and other knowledge bases. The coordinator structures this information and provides it to specialized agents that carry out specific tasks.
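A coordinator's core job, picking what goes into a limited context window, can be sketched as follows. This is a deliberately naive illustration: the word-overlap `relevance` score and the word-count budget are our simplifying assumptions (real systems typically use embeddings and token counts), and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str   # e.g. "repo", "jira", "confluence"
    text: str


def relevance(task: str, snippet: Snippet) -> float:
    """Toy relevance score: share of task words that appear in the snippet."""
    task_words = set(task.lower().split())
    snippet_words = set(snippet.text.lower().split())
    return len(task_words & snippet_words) / len(task_words) if task_words else 0.0


def build_context(task: str, snippets: list[Snippet], budget_words: int) -> list[Snippet]:
    """Pick the most relevant snippets that fit into a fixed word budget."""
    ranked = sorted(snippets, key=lambda s: relevance(task, s), reverse=True)
    chosen, used = [], 0
    for snippet in ranked:
        size = len(snippet.text.split())
        if relevance(task, snippet) == 0.0:
            break  # everything after this point is noise for this task
        if used + size <= budget_words:
            chosen.append(snippet)
            used += size
    return chosen


snippets = [
    Snippet("jira", "fix login timeout bug in auth service"),
    Snippet("confluence", "office party schedule and catering menu"),
    Snippet("repo", "auth service login handler retries on timeout"),
]
context = build_context("fix login timeout", snippets, budget_words=14)
print([s.source for s in context])
```

With a tighter budget, only the best-matching snippet makes it in, and the unrelated Confluence page is never passed to the specialized agent at all.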

In general, this kind of workflow resembles the role of a project manager or team coordinator in a real-world setting: someone who keeps the overall goal in mind, has access to all knowledge sources, and ensures that the entire team is on the same page. However, it remains an open question whether copying “human” processes is the most efficient way to organize multi-agent systems, and the search for more optimal architectures is only just beginning.

Degree of Mistrust and Safety Concerns Relative to Agentic AI

The risks of desynchronization and poor context management are only half the problem. There are more serious and pressing issues to consider: trust in AI systems and their security in general.

By design, an agent is autonomous, capable of making decisions and acting without constant human oversight. The higher the degree of autonomy, the more pressing the question becomes: can we entrust it with money, managerial decisions, or mission-critical business processes?

This creates a dilemma: on the one hand, agents promise efficiency and automation. On the other, poor decisions could lead to financial losses, reputational damage, or even business disruption. That is why the interface between humans and agent systems remains essential: it should strike a balance between allowing agents to act independently and enabling humans to intervene when necessary. At the same time, users should not be overloaded with information, and only critical signals should surface to their attention.

One part of the solution is strict risk management frameworks. In finance, for example, this could mean predefined loss limits, automatic kill switch modules that shut down algorithms in critical scenarios, or dedicated subsystems for monitoring operations. Similar approaches can apply to general-purpose agents: for instance, hard caps on transaction amounts or mandatory user confirmation for certain actions.
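The safeguards listed above can be combined into a small wrapper around an agent's actions. The sketch below is only an illustration under our own assumptions: `RiskGuard` and its limits are hypothetical, and a production system would add persistence, alerting, and audit logging.

```python
class RiskGuard:
    """Wraps an agent's actions with a per-transaction cap and a loss limit."""

    def __init__(self, max_transaction: float, max_total_loss: float):
        self.max_transaction = max_transaction
        self.max_total_loss = max_total_loss
        self.total_loss = 0.0
        self.halted = False  # kill switch state

    def authorize(self, amount: float) -> bool:
        """Allow a transaction only if the guard is live and the cap holds."""
        return not self.halted and amount <= self.max_transaction

    def record_result(self, pnl: float) -> None:
        """Track realized losses and trip the kill switch at the limit."""
        if pnl < 0:
            self.total_loss += -pnl
        if self.total_loss >= self.max_total_loss:
            self.halted = True


guard = RiskGuard(max_transaction=5_000.0, max_total_loss=1_000.0)
print(guard.authorize(3_000.0))   # within the cap
print(guard.authorize(8_000.0))   # above the cap
guard.record_result(-600.0)
guard.record_result(-500.0)       # cumulative loss reaches the limit
print(guard.authorize(100.0))     # blocked: kill switch has tripped
```

Once the kill switch trips, even small transactions are refused until a human resets the guard. Nothing in the class lets the agent clear the flag itself.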

Another emerging concept is that of “controller agents” whose task is to oversee the behavior of other agents, monitor their memory and decision-making, and block risky actions. Such controllers can enforce financial, legal, or ethical safeguards. This creates a second layer of supervision built directly into the architecture of agent systems.

Certain variations of agentic AI are inherently safer because they operate with lower autonomy or within narrowly defined domains, reducing the risk of errors or unintended actions. Highly autonomous or general-purpose agents, while more powerful, require additional safeguards like controller agents, human-in-the-loop oversight, and strict risk management frameworks to ensure secure and reliable operation.

Growing Your Agentic AI System. So Simple and So Complex at Once

At first glance, growing agentic systems seems to be a walk in the park. Modern LLMs come out of the box as universal tools, capable of handling conversations on nearly any topic and resolving a wide range of tasks. Adding a new agent to a multi-agent architecture today can take just minutes: all you need is to define its role and basic competencies.

Yet behind this simplicity lies deep complexity. A truly universal agent must interact with an endless variety of external systems: from booking platforms and payment services to corporate repositories. These systems are not always integration-friendly: some require official APIs and agreements to protect their business models. As a result, scaling agentic ecosystems is not only an engineering challenge but also one of law, policy, and business strategy.

There are technical barriers as well. Each new component adds computational load: the more agents and oversight layers (for safety or compliance), the higher the latency and operational cost. In practice, this forces teams to balance between general-purpose models and specialized ones that are cheaper and optimized for narrower tasks.

And all of this ultimately depends on hardware. LLMs are computationally expensive, and the chip market remains concentrated in the hands of a few players. New architectures promise dramatic speedups and cost reductions, but for now, scaling remains costly.

Key Takeaways

Agentic AI represents one of the most powerful and, at the same time, most complex variations of artificial intelligence. These systems can operate with a remarkable degree of autonomy, making decisions with minimal human involvement, or in some cases, none at all.

But we should always keep in mind that with great autonomy comes equally great responsibility. The challenges are not only technical, such as context management, latency, and integration, but also strategic and ethical: trust, safety, compliance, and risk management. That’s why growing such systems is always a dilemma to resolve, since each new agent added to a system increases both its potential and its fragility.

To implement agentic AI systems securely and maintain their effective functioning, you can’t do without a professional development team. Reach out to us; we’ll help you get the most out of the capabilities of artificial intelligence!